Counting Events and Participants within Highly Ambiguous Data covering a very long tail

SemEval-2018 Task 5

To see a detailed task description and to participate in this task, please visit the official competition page on Codalab.

Task Summary

News continuously report on many different events but can NLP systems actually tell one event from the other within large streams of data? Especially when we consider long-tail events of the same type on which little information is available, NLP systems do a poor job of determining the quantity of participants of events, and there are essentially no NLP systems that can determine the number of times a given type of event has taken place in a corpus.

We are hosting a “referential quantification” task that requires systems to provide answers to questions about the number of incidents of an event type (subtasks S1 and S2) or participants in roles (subtask S3).

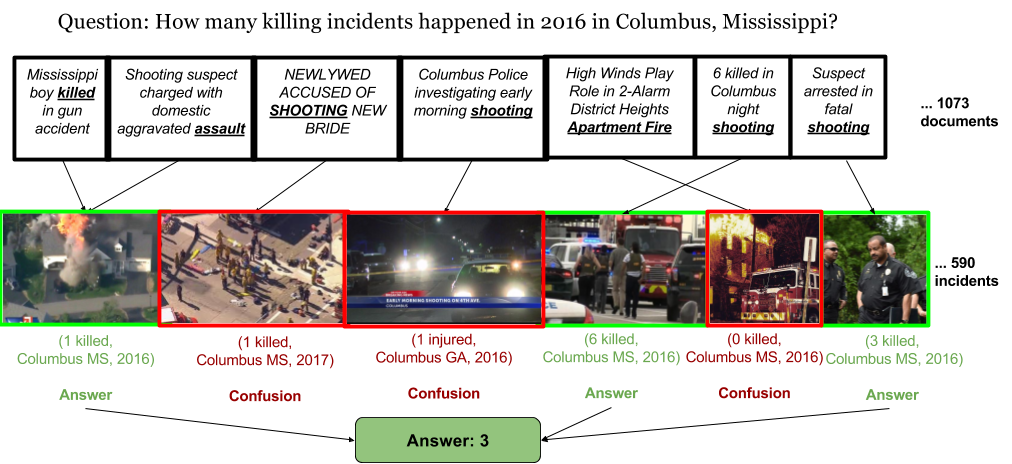

Given a set of questions and corresponding documents, the participating systems need to provide a numeric answer together with the supporting documents and text mentions of the answer events in the documents. To correctly answer each question, participating systems must be able to to establish the meaning, reference, and identity (i.e. coreference) of events and participants in news articles. A schematic example of the S2 challenge is given below:

The data (texts and answers) are prepared in such a way that the task deliberately exhibits large ambiguity and variation, as well as coverage of long tail phenomena by including a substantial amount of low-frequent, local events and entities.

Data

Domains – Our data covers three domains: gun violence, fire disasters, and business.

Document representation – For each document, we provide its title, content (tokenized), and creation time.

Question representation – We will provide the participants with a structured representation of each question. Example:

- Subtask: S2

- Event type: killing

- Time: 6-2014

- Location:wiki:Wilmington,_Los_Angeles

- Document IDs: 13, 22, 85, 298, …

Answer representation Based on our tokenized document input, systems will generate a CoNLL-like format, in which they indicate which mentions refer to which incidents. The number of unique incident identifiers determines the system answer.

Subtasks

This SemEval-2018 task is designed as three incrementally harder subtasks:

- Subtask S1 Find the single event that answers the question (Which killing incident happened in 2014 in Columbus, OH?)

- Subtask S2 Find all events (if any) that answer the question (How many killing incidents happened in 2016 in Columbus, MS?)

- Subtask S3 Find all participant-role relations that answer the question (How many people were killed in 2016 in Columbus, MS?)

Evaluation & Baselines

For each question, systems submit a CoNLL file, consisting of a set of incidents with their corresponding documents and event mentions. We perform both extrinsic and intrinsic evaluation over the system output:

- Incident-level evaluation – For all questions, we compare the number of incidents provided by the system against the gold standard answers from our structured data.

- Document-level evaluation – We will also test to which extent systems are able to find which documents describe a certain incident. We compare the set of documents provided by the system against the set of gold documents.

- Mention-level evaluation – For a selected subset of the questions, we will evaluate event coreference on a mention level, by using the standard metrics to evaluate event coreference sets (MUC, BLANC, CEAF, B3, CoNLL) and their open-source implementation found on github.

We will provide the following baselines:

- Surface form baseline This baseline uses surface forms of question components to find answer incidents in data. For instance, if a question concerns injuries in Texas in the year of 2014, this baseline looks for documents that mention: “2014”, “Texas” and “injury”.

- Combination of surface form filtering and document clustering This baseline uses the output of the surface form-based baseline as input and cluster the selected documents into coreferential sets based on textual similarity.

Organizers & Contact

Marten Postma (m.c.postma@vu.nl)

Filip Ilievski (f.ilievski@vu.nl)

Piek Vossen (piek.vossen@vu.nl)

Important Dates

August 14, 2017 – Trial data ready

December 1, 2017 – Test data ready

January 8-29, 2018 – Evaluation

February 28, 2018 – Paper submission due

April 30, 2018 – Camera ready version

Summer 2018 – SemEval workshop

Intent to Participate?

Please fill this Google form to stay in touch!